
CONTROLNET POSE REFERENCE

Control human poses in AI-generated images with precision using skeleton keypoint detection. Generate consistent character poses for animation, game design, and creative projects.

Precise Pose Control

18+ Keypoint Detection

Multi-Person Support

What is ControlNet?

ControlNet is a neural network technique that adds conditioning inputs, such as edge maps, depth maps, or pose skeletons, to an image generation model, giving you precise control over the output. Combined with a pose detector such as OpenPose, it lets you guide the positioning of human bodies, hands, and faces in your generated images.

Skeleton Keypoints

Extract 18+ body keypoints including head, shoulders, elbows, wrists, hips, knees, and ankles for precise pose mapping
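As a rough illustration of what an 18-keypoint skeleton looks like as data, the sketch below uses the commonly cited OpenPose COCO-style body layout. This page does not state which layout the tool uses, so the keypoint order, the `LIMBS` list, the `Keypoint` class, and the `visible_limbs` helper are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumed layout: the 18 body keypoints of the OpenPose COCO-style model.
KEYPOINT_NAMES = [
    "nose", "neck",
    "right_shoulder", "right_elbow", "right_wrist",
    "left_shoulder", "left_elbow", "left_wrist",
    "right_hip", "right_knee", "right_ankle",
    "left_hip", "left_knee", "left_ankle",
    "right_eye", "left_eye", "right_ear", "left_ear",
]

# Pairs of keypoints that are joined by a "bone" when the skeleton is drawn.
LIMBS = [
    ("neck", "right_shoulder"), ("right_shoulder", "right_elbow"),
    ("right_elbow", "right_wrist"),
    ("neck", "left_shoulder"), ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("neck", "right_hip"), ("right_hip", "right_knee"),
    ("right_knee", "right_ankle"),
    ("neck", "left_hip"), ("left_hip", "left_knee"),
    ("left_knee", "left_ankle"),
    ("neck", "nose"),
    ("nose", "right_eye"), ("right_eye", "right_ear"),
    ("nose", "left_eye"), ("left_eye", "left_ear"),
]


@dataclass
class Keypoint:
    x: float           # horizontal position, normalized to 0..1
    y: float           # vertical position, normalized to 0..1
    confidence: float  # detector confidence, 0..1


def visible_limbs(pose: dict[str, Keypoint], min_confidence: float = 0.3):
    """Return the limbs whose two endpoints were both detected confidently."""
    def ok(name: str) -> bool:
        kp = pose.get(name)
        return kp is not None and kp.confidence >= min_confidence

    return [(a, b) for a, b in LIMBS if ok(a) and ok(b)]
```

A pose is then a dictionary from keypoint name to `Keypoint`; joints that are hidden or detected with low confidence simply drop out of the drawn skeleton.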

Fast & Accurate

Real-time pose detection that transfers only the pose, without copying other details such as outfits or backgrounds

Multi-Person Detection

Simultaneously detect and control poses for multiple people in a single image

How ControlNet Pose Works

1. Upload Pose Reference

Provide a skeleton pose image (stick figure or keypoints format) or use an existing photo to extract the pose

2. Keypoint Extraction

OpenPose detects and maps key body positions, creating a control map with precise joint locations

3. Generate with Control

Combine your text prompt with the pose control map to generate images that match your exact pose requirements
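The three steps above can be sketched in plain Python. This is not the tool's actual implementation: the joint coordinates stand in for the detector's output, `render_control_map` draws one-pixel stick figures with Bresenham's line algorithm, and `GenerationRequest` is a hypothetical container that only shows how the prompt and the control map travel together.

```python
from dataclasses import dataclass

Point = tuple[int, int]  # (x, y) in pixel coordinates


def draw_line(canvas: list[list[int]], p0: Point, p1: Point, value: int = 1) -> None:
    """Draw a line with Bresenham's algorithm. Points must lie inside the canvas."""
    x0, y0 = p0
    x1, y1 = p1
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        canvas[y0][x0] = value
        if (x0, y0) == (x1, y1):
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def render_control_map(
    people: list[dict[str, Point]],
    limbs: list[tuple[str, str]],
    width: int,
    height: int,
) -> list[list[int]]:
    """Rasterize every person's skeleton into one control map (0 = empty, 1 = bone)."""
    canvas = [[0] * width for _ in range(height)]
    for joints in people:
        for a, b in limbs:
            # Skip limbs whose endpoints were not detected for this person.
            if a in joints and b in joints:
                draw_line(canvas, joints[a], joints[b])
    return canvas


@dataclass
class GenerationRequest:
    """Hypothetical payload: the text prompt plus the pose control map."""
    prompt: str
    control_map: list[list[int]]
```

Because `people` is a list, the same control map can hold several skeletons at once, which is how multi-person pose control fits into this picture.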

Pose Accuracy Tests

We tested Qwen's ControlNet implementation across various pose complexities to measure accuracy and usability for real-world creative workflows. Results show excellent performance for animation, character design, and game development.

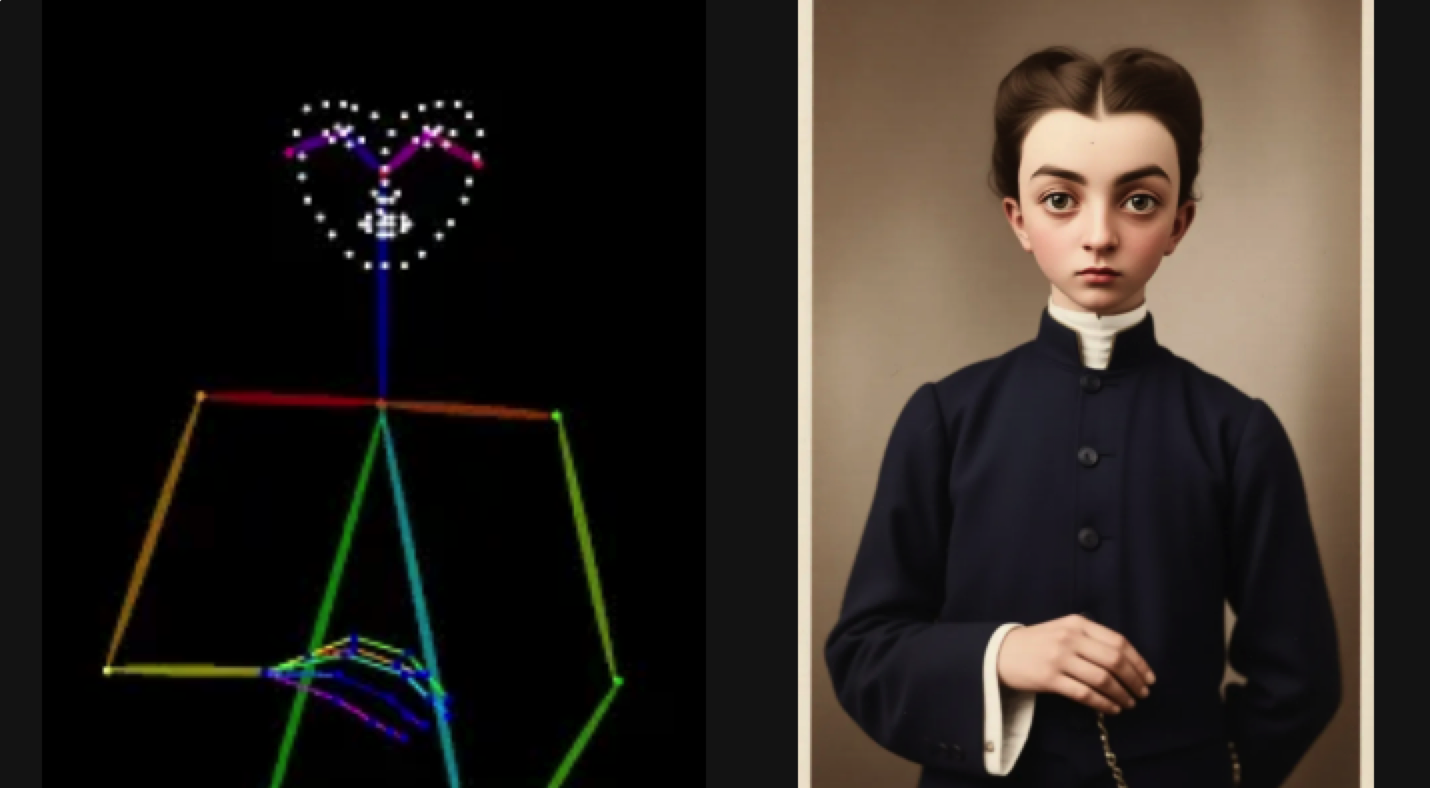

Test 1

Simple Standing Pose

Prompt

Use it as a reference, A woman in the same position

Reference Pose

Generated Result

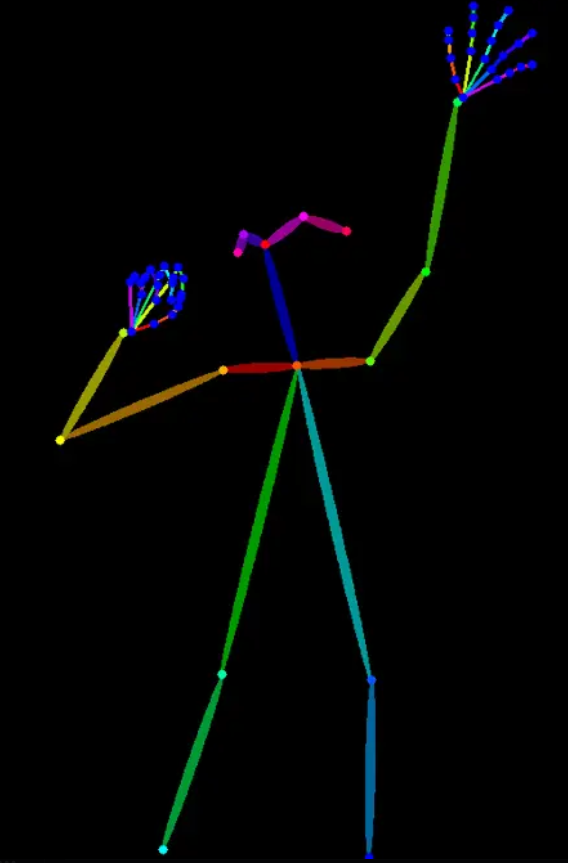

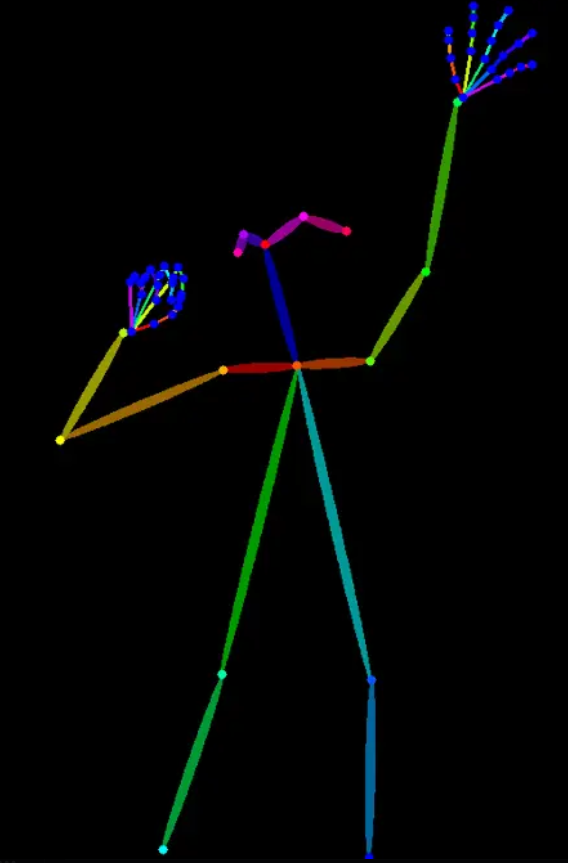

Test 2

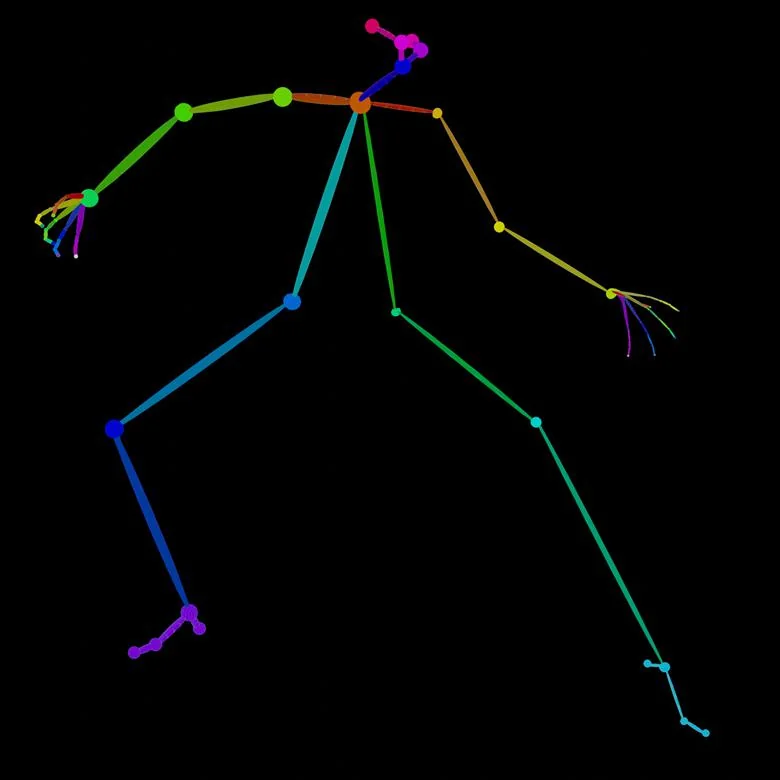

Dynamic Action Pose

Prompt

an anime style movie scene samurai in a fight position as reference

Reference Pose

Generated Result

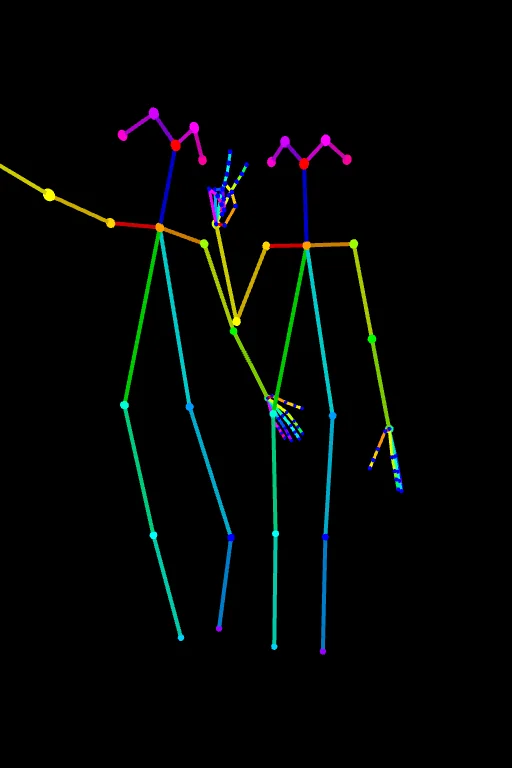

Test 3

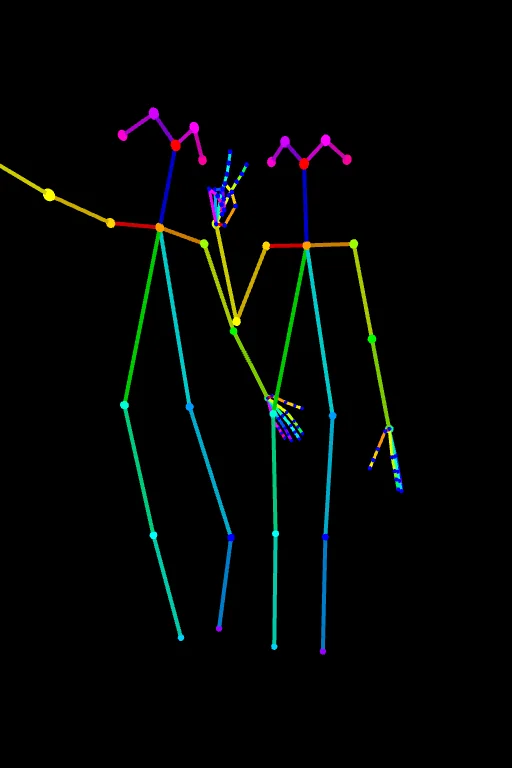

Multi-Person with Overlapping Limbs

Prompt

Use the reference pose to create a photo of 2 friends taking a photo, make sure of the hands positions

Reference Pose

Generated Result 1

Generated Result 2

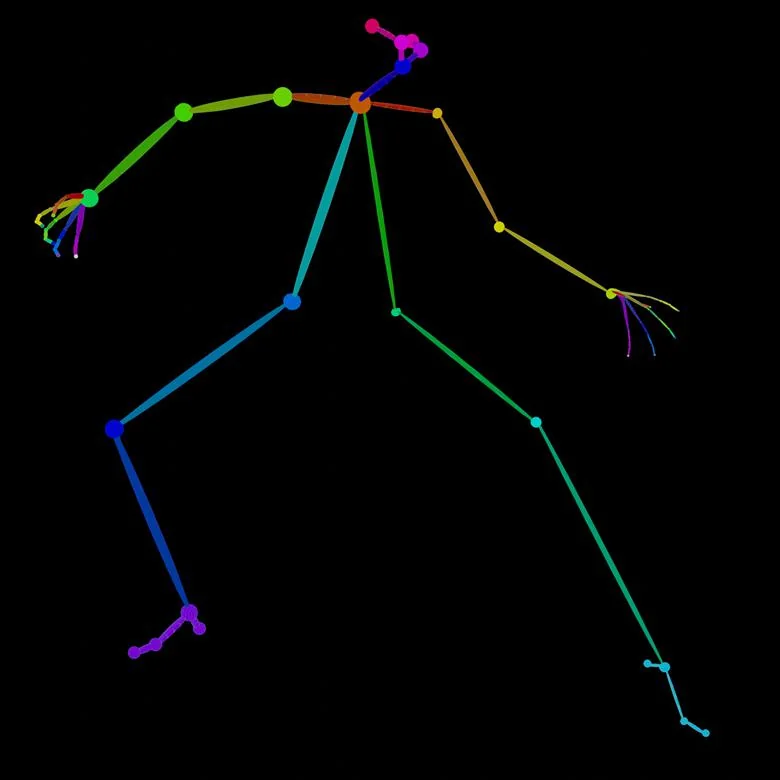

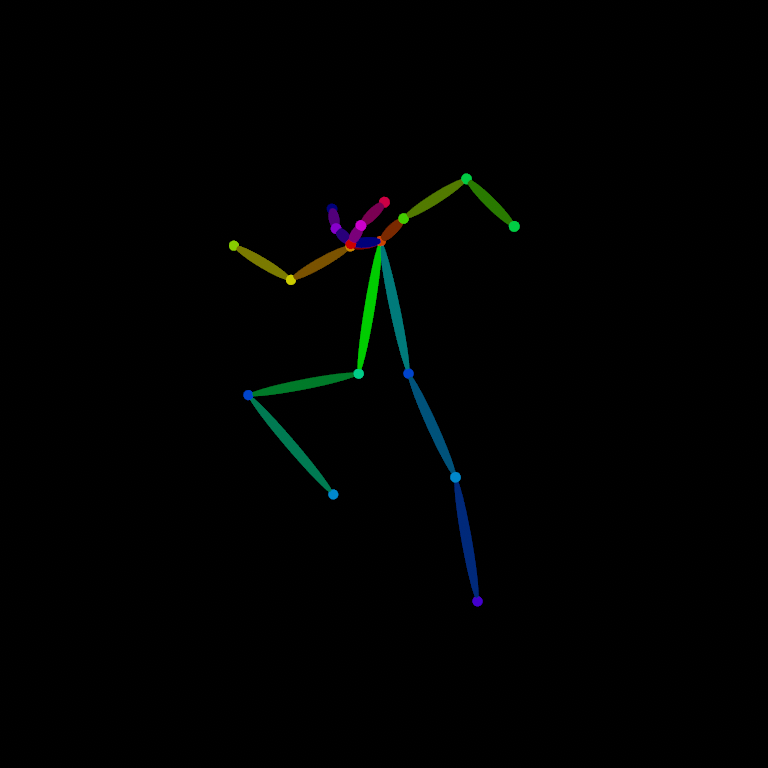

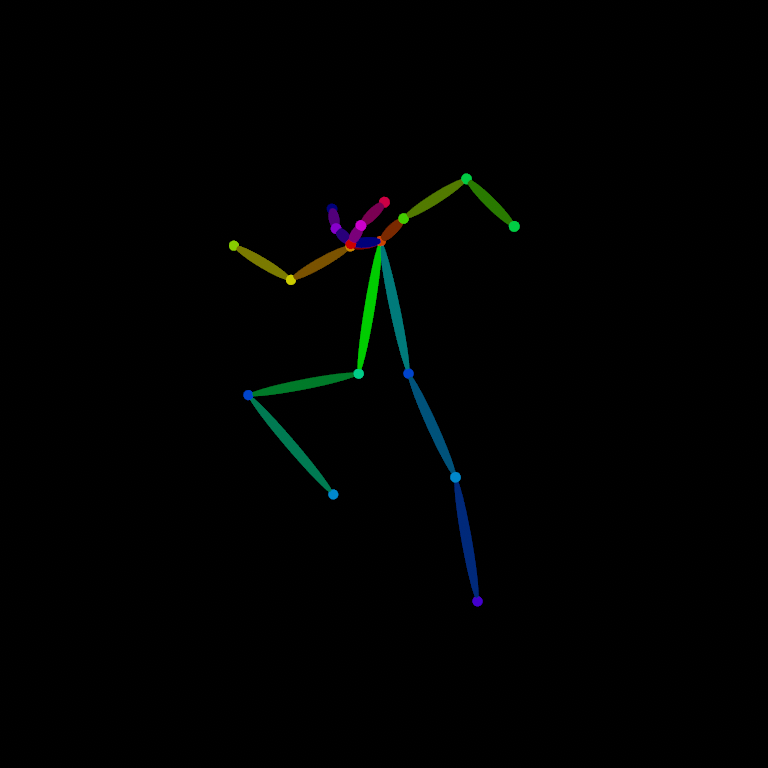

Test 4

Complex Multi-Directional Limbs

Prompt

visualize in the same position a woman in a gym, with a training suit

Reference Pose

Generated Result

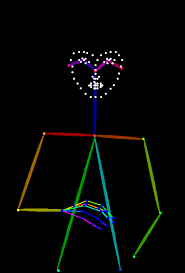

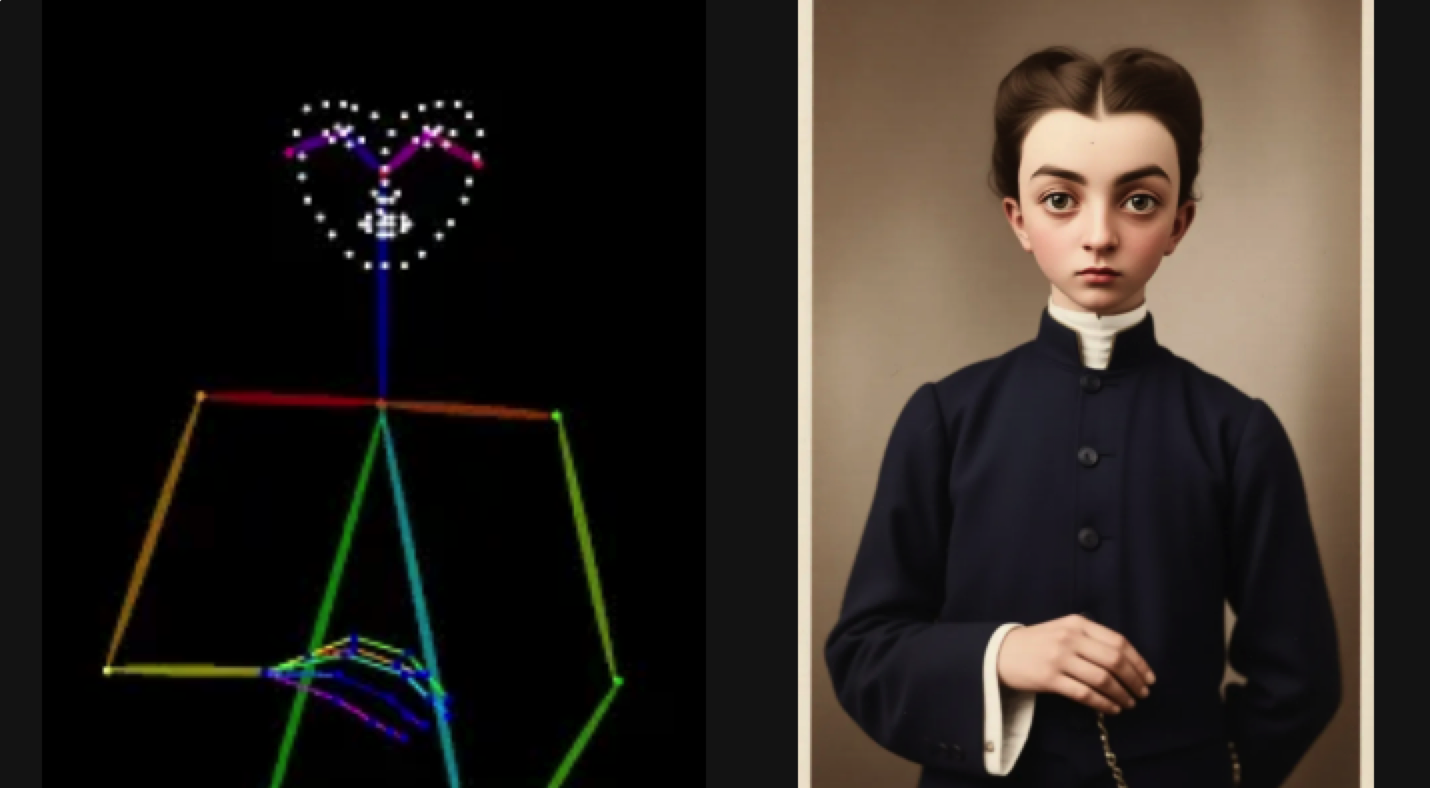

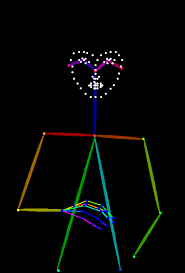

Test 5

Portrait with Facial Structure

Prompt

Visualize as a man portrait

Reference Pose

Generated Result

Best Practices & Tips

✓ When ControlNet Works Best

- Clear, balanced poses with distinct keypoints

- Static or medium-dynamic poses (standing, walking, simple actions)

- Simple backgrounds that don't interfere with pose detection

- Detailed text prompts specifying clothing and environment

- Single or well-separated multiple subjects

⚠ Limitations to Consider

- Accuracy drops with heavily overlapping limbs

- Poses with extreme foreshortening may be reproduced less precisely

- Very complex multi-person scenes (3+ people) may need iteration

- Unusual or abstract poses may be harder to reproduce

- To generate an image without any text in it, add "(without text)" to the prompt
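Some of the limitations above can be turned into a rough pre-flight check on a reference pose. The sketch below is only a heuristic: it uses bounding-box overlap between people as a stand-in for overlapping limbs, and the function names and the `max_iou` threshold of 0.3 are assumptions, not values taken from the tests on this page.

```python
Point = tuple[float, float]
Box = tuple[float, float, float, float]  # (min_x, min_y, max_x, max_y)


def bounding_box(joints: list[Point]) -> Box:
    """Smallest axis-aligned box that contains all of a person's joints."""
    xs = [x for x, _ in joints]
    ys = [y for _, y in joints]
    return (min(xs), min(ys), max(xs), max(ys))


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes, between 0.0 and 1.0."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def preflight_warnings(people: list[list[Point]], max_iou: float = 0.3) -> list[str]:
    """Flag reference poses that are likely to need extra iterations."""
    warnings = []
    if len(people) >= 3:
        warnings.append("3+ people in the scene: expect to iterate")
    boxes = [bounding_box(joints) for joints in people]
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if box_iou(boxes[i], boxes[j]) > max_iou:
                warnings.append(f"people {i} and {j} overlap heavily")
    return warnings
```

An empty result does not guarantee an accurate generation; it only means none of these coarse warning signs were found.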